Writing complex macros in Rust: Reverse Polish Notation

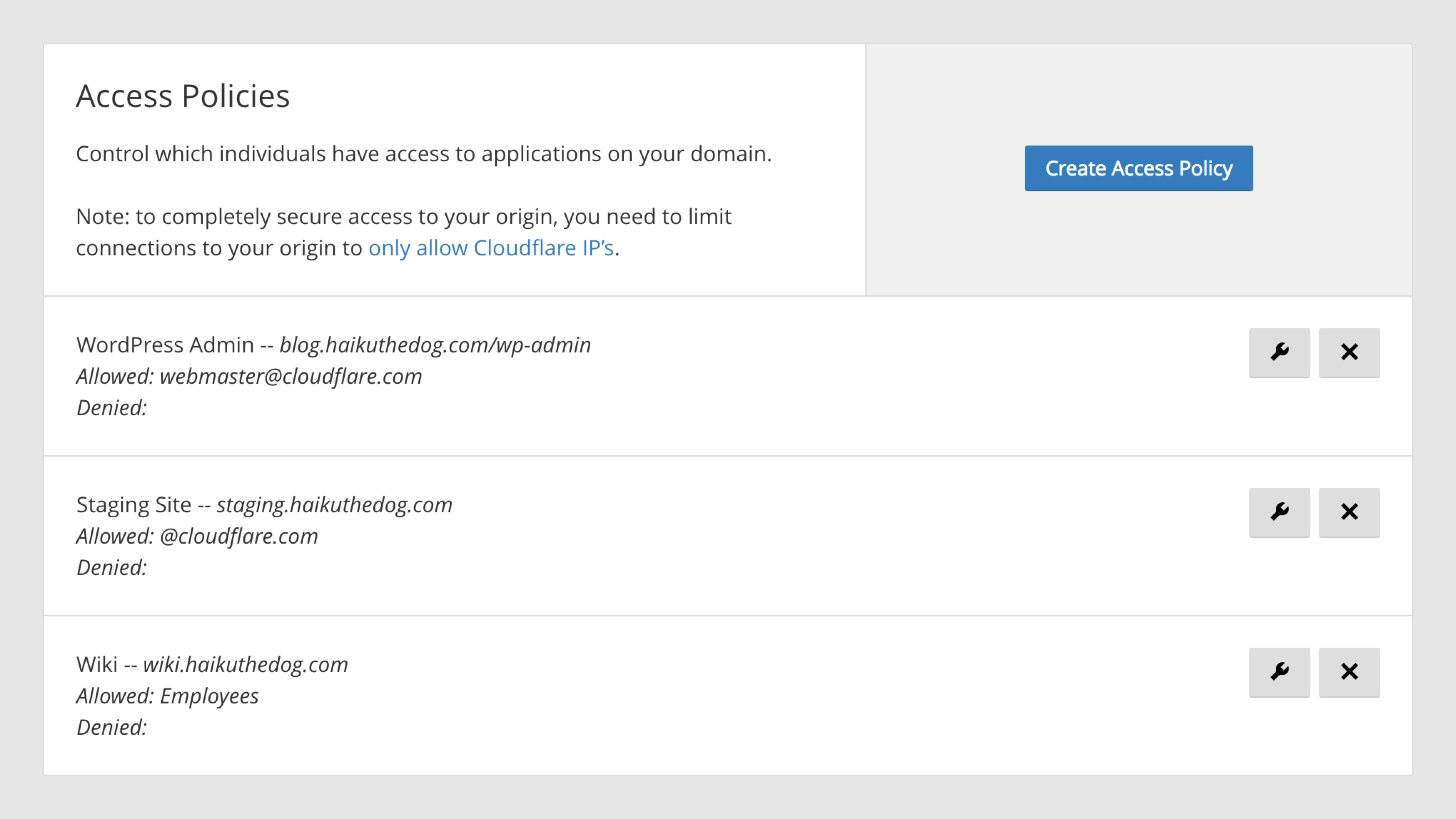

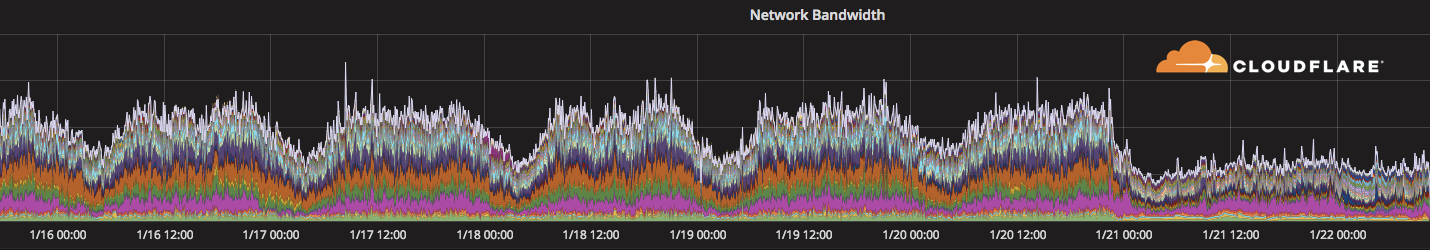

(This is a crosspost of a tutorial originally published on my personal blog)

Among other interesting features, Rust has a powerful macro system. Unfortunately, even after reading The Book and various tutorials, I still struggled when I tried to implement a macro that processes complex lists of different elements. It took some time until I got to that "ding" moment and started misusing macros for everything :) (OK, not everything as in the i-am-using-macros-because-i-dont-want-to-use-functions-and-specify-types-and-lifetimes everything I've seen some people do, but anywhere it's actually useful.)


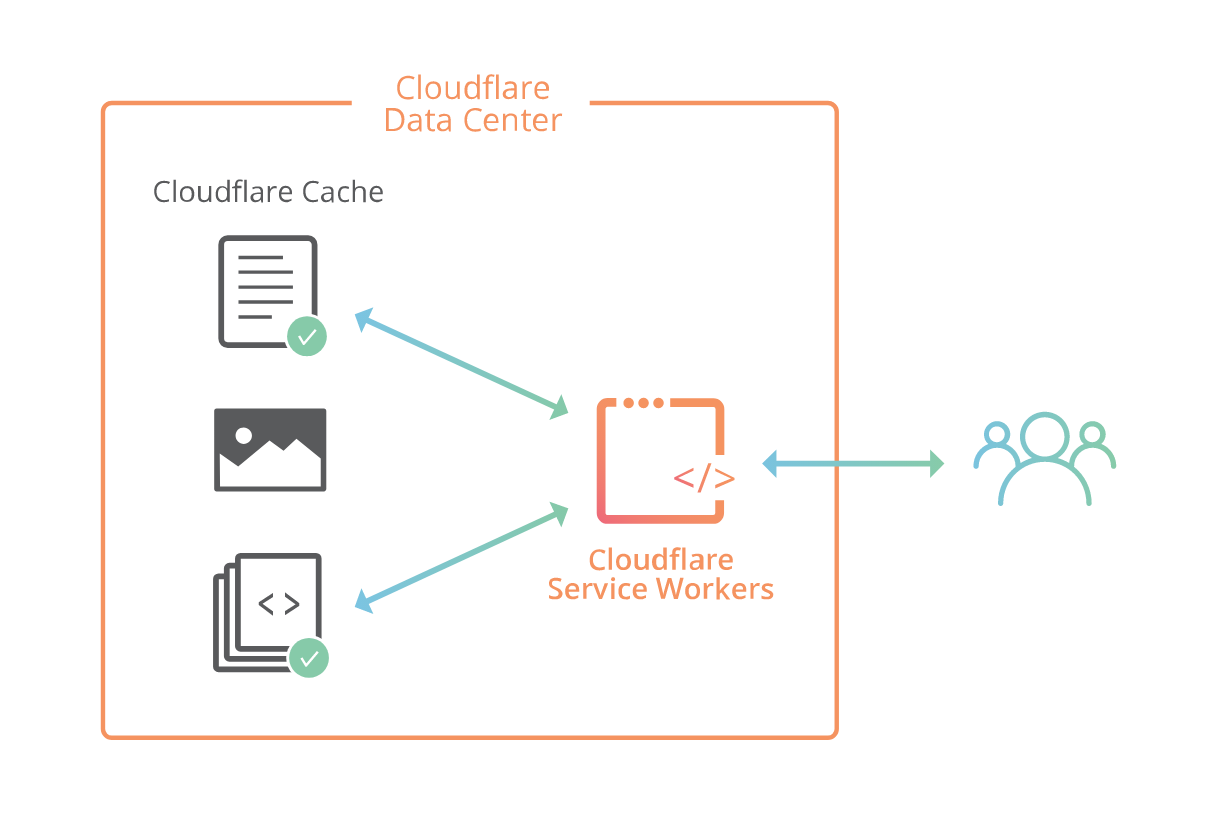

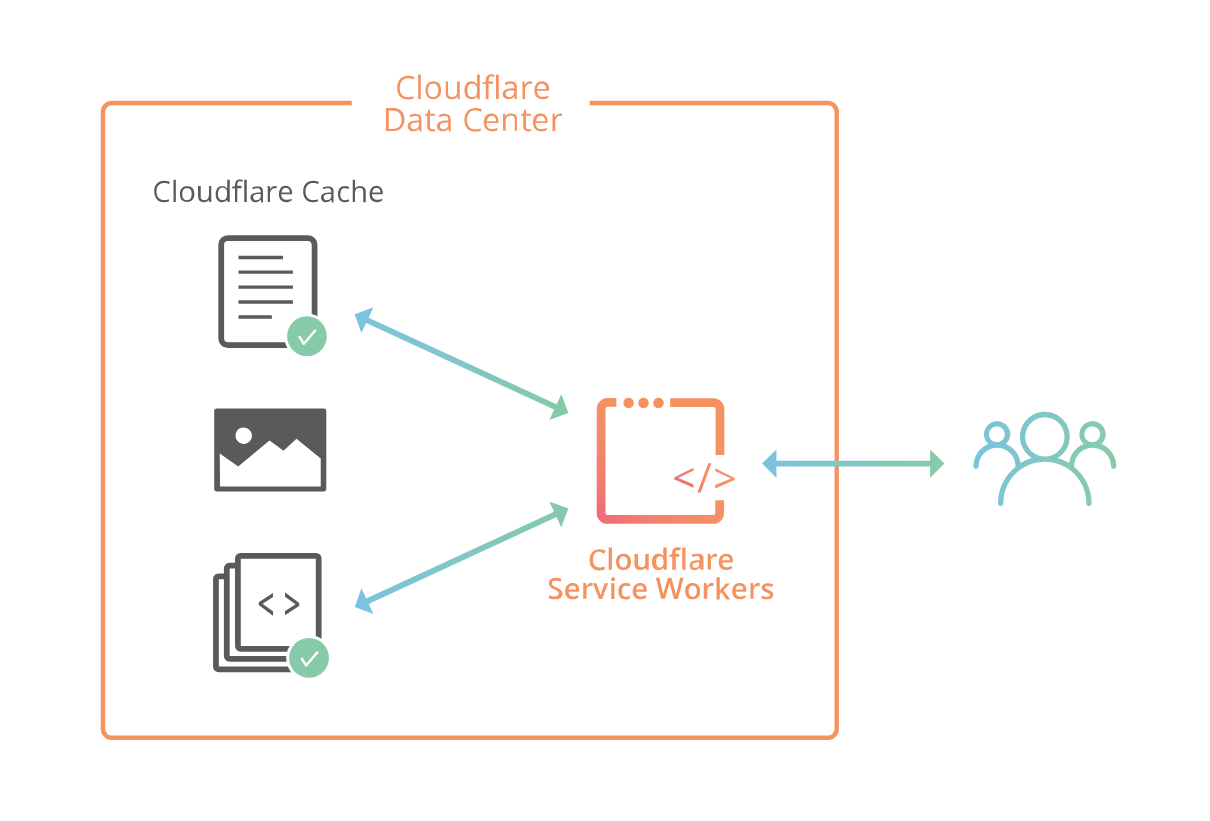

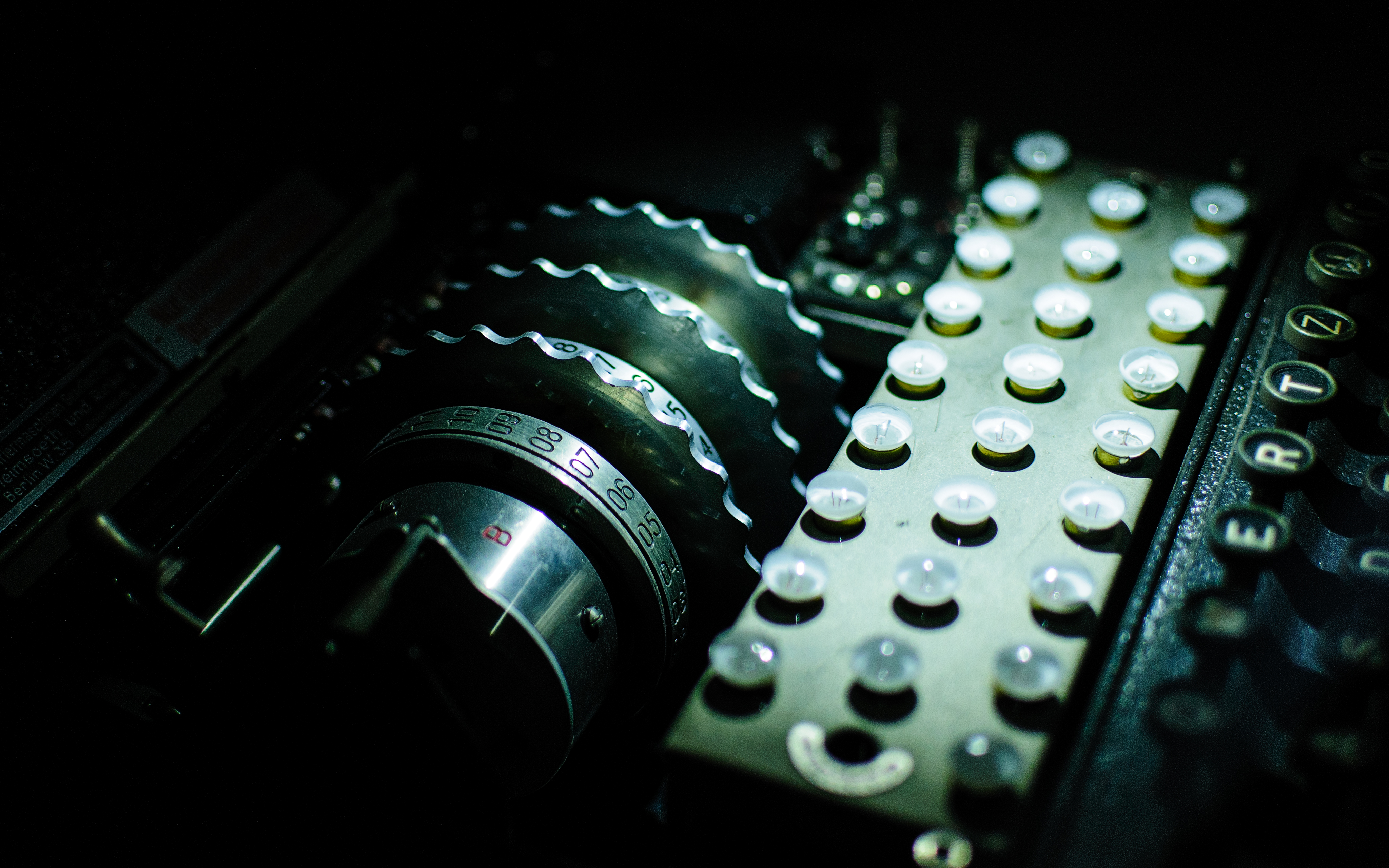

CC BY 2.0 image by Conor Lawless

So, here is my take on describing the principles behind writing such macros. It assumes you have read the Macros section of The Book and are familiar with basic macro definitions and token types.

I'll take Reverse Polish Notation as an example for this tutorial. It's interesting because it's simple enough that you might already be familiar with it from school, and yet implementing it statically at compile time already requires a recursive macro approach.

Reverse Polish Notation (also called postfix notation) uses a stack for all its operations: any operand is pushed onto the stack, and any [binary] operator takes two operands from the stack, evaluates the result and puts it back. So an expression like the following:

2 3 + 4 *

translates into:

- Put 2 onto the stack.

- Put 3 onto the stack.

- Take two last values from the stack (3 and 2), apply operator + and put the result (5) back onto the stack.

- Put 4 onto the stack.

- Take two last values from the stack (4 and 5), apply operator * (4 * 5) and put the result (20) back onto the stack.

- End of expression, the single value on the stack is the result (20).

In a more common infix notation, used in math and most modern programming languages, the expression would look like (2 + 3) * 4.
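For comparison, here is a minimal runtime sketch of the same stack algorithm. The eval_rpn function is a hypothetical helper written for this illustration only (integer operands, whitespace-separated tokens); it is not part of the macro we are about to build.

```rust
/// Evaluates a whitespace-separated RPN expression at runtime.
/// Hypothetical helper for illustration: returns None for malformed input.
fn eval_rpn(input: &str) -> Option<i64> {
    let mut stack: Vec<i64> = Vec::new();
    for token in input.split_whitespace() {
        match token {
            "+" | "-" | "*" | "/" => {
                // The most recently pushed value is the right-hand operand.
                let b = stack.pop()?;
                let a = stack.pop()?;
                stack.push(match token {
                    "+" => a + b,
                    "-" => a - b,
                    "*" => a * b,
                    // checked_div returns None on division by zero.
                    _ => a.checked_div(b)?,
                });
            }
            // Anything else must be a number and is pushed as an operand.
            number => stack.push(number.parse::<i64>().ok()?),
        }
    }
    // A well-formed expression leaves exactly one value on the stack.
    if stack.len() == 1 { stack.pop() } else { None }
}

fn main() {
    println!("{:?}", eval_rpn("2 3 + 4 *")); // Some(20)
}
```

The macro version below follows the same steps, except that the "stack" will be a list of tokens instead of a Vec.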

So let's write a macro that would evaluate RPN at compile-time by converting it into an infix notation that Rust understands.

macro_rules! rpn {

// TODO

}

println!("{}", rpn!(2 3 + 4 *)); // 20

Let's start with pushing numbers onto the stack.

At the time of writing, macros didn't allow matching literals (the literal fragment specifier was only stabilized later, in Rust 1.32), and expr won't work for us because it can accidentally match a sequence like 2 + 3 ... instead of taking just a single number. So we'll resort to tt - a generic token matcher that matches exactly one token tree (whether it's a primitive token like a literal/identifier/lifetime/etc. or a ()/[]/{}-delimited group containing more tokens):

macro_rules! rpn {

($num:tt) => {

// TODO

};

}
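To get a feel for what counts as a single token tree, here is a small sketch with a hypothetical count_tts! macro (not part of the RPN macro) that counts the token trees in its input. A delimited group counts as one, no matter how many tokens it contains.

```rust
// Hypothetical helper for illustration: counts token trees in its input.
macro_rules! count_tts {
    // No tokens left.
    () => { 0usize };
    // Peel off one token tree and count the rest recursively.
    ($head:tt $($tail:tt)*) => { 1usize + count_tts!($($tail)*) };
}

fn main() {
    // Five separate tokens: 2, 3, +, 4, *
    println!("{}", count_tts!(2 3 + 4 *)); // 5
    // The parenthesized group is a single token tree, followed by * and 4.
    println!("{}", count_tts!((2 + 3) * 4)); // 3
}
```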

Now, we'll need a variable for the stack.

We can't use a real variable here, because we want this stack to exist only at compile time. So, instead, the trick is to have a separate token sequence that can be passed around and used as a kind of accumulator.

In our case, let's represent it as a comma-separated sequence of expr (since we will be using it not only for simple numbers but also for intermediate infix expressions) and wrap it into brackets to separate it from the rest of the input:

macro_rules! rpn {

([ $($stack:expr),* ] $num:tt) => {

// TODO

};

}

Now, a token sequence is not really a variable - you can't modify it in place and do something afterwards. Instead, you can create a new copy of this token sequence with the necessary modifications, and recursively call the same macro again.

If you are coming from a functional language background or have worked with any library providing immutable data before, both of these approaches - mutating data by creating a modified copy and processing lists with recursion - are likely already familiar to you:

macro_rules! rpn {

([ $($stack:expr),* ] $num:tt) => {

rpn!([ $num $(, $stack)* ])

};

}

Now, obviously, the case with just a single number is rather unlikely and not very interesting to us, so we'll need to match anything else after that number as a sequence of zero or more tt tokens, which can be passed to the next invocation of our macro for further matching and processing:

macro_rules! rpn {

([ $($stack:expr),* ] $num:tt $($rest:tt)*) => {

rpn!([ $num $(, $stack)* ] $($rest)*)

};

}
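The accumulator-plus-recursion idea can be seen in isolation in the following sketch. The reversed! macro is a hypothetical example, not part of the RPN macro: it pushes each input token onto the front of a bracketed accumulator, just like our stack, and emits the accumulator as an array once the input runs out.

```rust
// Hypothetical helper for illustration: reverses a list of tokens
// by accumulating them in a bracketed "stack".
macro_rules! reversed {
    // No input left: emit the accumulator as an array.
    ([ $($acc:expr),* ]) => { [ $($acc),* ] };
    // Move the first input token to the front of the accumulator and recurse.
    ([ $($acc:expr),* ] $head:tt $($rest:tt)*) => {
        reversed!([ $head $(, $acc)* ] $($rest)*)
    };
}

fn main() {
    // Expands step by step: [] 1 2 3 -> [1] 2 3 -> [2, 1] 3 -> [3, 2, 1]
    println!("{:?}", reversed!([] 1 2 3)); // [3, 2, 1]
}
```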

At this point we're still missing operator support. How do we match operators?

If our RPN were a sequence of tokens that we wanted to process in exactly the same way, we could simply use a list like $($token:tt)*. Unfortunately, that wouldn't let us go through the list and either push an operand or apply an operator depending on each token.

The Book says that the "macro system does not deal with parse ambiguity at all", and that's true for a single macro branch - we can't match a sequence of numbers followed by an operator like $($num:tt)* + because + is also a valid token and could be matched by the tt group. But this is where recursive macros help again.

If you have different branches in your macro definition, Rust will try them one by one, in the order they are written, so we can put our operator branches before the numeric one and, this way, avoid any conflict:

macro_rules! rpn {

([ $($stack:expr),* ] + $($rest:tt)*) => {

// TODO

};

([ $($stack:expr),* ] - $($rest:tt)*) => {

// TODO

};

([ $($stack:expr),* ] * $($rest:tt)*) => {

// TODO

};

([ $($stack:expr),* ] / $($rest:tt)*) => {

// TODO

};

([ $($stack:expr),* ] $num:tt $($rest:tt)*) => {

rpn!([ $num $(, $stack)* ] $($rest)*)

};

}
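A quick way to convince yourself that branch order matters is a sketch like the one below. Both macros are hypothetical and exist only for this illustration; they contain the same two branches in different order.

```rust
// Specific branch first: + is recognized as an operator.
macro_rules! classify {
    (+) => { "operator" };
    ($other:tt) => { "operand" };
}

// Generic branch first: tt also matches +, so the second branch is never reached.
macro_rules! classify_swapped {
    ($other:tt) => { "operand" };
    (+) => { "operator" };
}

fn main() {
    println!("{}", classify!(+)); // operator
    println!("{}", classify!(3)); // operand
    println!("{}", classify_swapped!(+)); // operand
}
```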

As I said earlier, operators are applied to the last two numbers on the stack, so we'll need to match them separately, "evaluate" the result (construct a regular infix expression) and put it back:

macro_rules! rpn {

([ $b:expr, $a:expr $(, $stack:expr)* ] + $($rest:tt)*) => {

rpn!([ $a + $b $(, $stack)* ] $($rest)*)

};

([ $b:expr, $a:expr $(, $stack:expr)* ] - $($rest:tt)*) => {

rpn!([ $a - $b $(, $stack)* ] $($rest)*)

};

([ $b:expr, $a:expr $(, $stack:expr)* ] * $($rest:tt)*) => {

rpn!([ $a * $b $(,$stack)* ] $($rest)*)

};

([ $b:expr, $a:expr $(, $stack:expr)* ] / $($rest:tt)*) => {

rpn!([ $a / $b $(,$stack)* ] $($rest)*)

};

([ $($stack:expr),* ] $num:tt $($rest:tt)*) => {

rpn!([ $num $(, $stack)* ] $($rest)*)

};

}

I'm not really a fan of such obvious repetitions, but, just like with literals, there is no special token type to match operators.

What we can do, however, is add a helper that would be responsible for the evaluation, and delegate any explicit operator branch to it.

In macros, you can't really use an external helper, but the one thing you can be sure about is that your macro itself is already in scope, so the usual trick is to have a branch in the same macro "marked" with some unique token sequence, and call it recursively like we did in regular branches.

Let's use @op as such a marker, and accept any operator via tt inside it (tt is unambiguous in this context because we'll be passing only operators to this helper).

And the stack does not need to be expanded in each separate branch anymore - since we wrapped it into [] brackets earlier, it can be matched as any other token tree (tt), and then passed into our helper:

macro_rules! rpn {

(@op [ $b:expr, $a:expr $(, $stack:expr)* ] $op:tt $($rest:tt)*) => {

rpn!([ $a $op $b $(, $stack)* ] $($rest)*)

};

($stack:tt + $($rest:tt)*) => {

rpn!(@op $stack + $($rest)*)

};

($stack:tt - $($rest:tt)*) => {

rpn!(@op $stack - $($rest)*)

};

($stack:tt * $($rest:tt)*) => {

rpn!(@op $stack * $($rest)*)

};

($stack:tt / $($rest:tt)*) => {

rpn!(@op $stack / $($rest)*)

};

([ $($stack:expr),* ] $num:tt $($rest:tt)*) => {

rpn!([ $num $(, $stack)* ] $($rest)*)

};

}
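The same "marked branch" trick works for any macro that needs a private helper. Here is a minimal sketch with a hypothetical sum! macro (unrelated to RPN), where @acc marks the internal branches and the last branch is the public entry point.

```rust
// Hypothetical example of internal helper branches marked with @acc.
macro_rules! sum {
    // Internal: no items left, emit the accumulated expression.
    (@acc $total:expr;) => { $total };
    // Internal: add the next item to the accumulator and recurse.
    (@acc $total:expr; $head:tt $($rest:tt)*) => {
        sum!(@acc $total + $head; $($rest)*)
    };
    // Public entry point: start with an accumulator of 0.
    ($($items:tt)*) => { sum!(@acc 0; $($items)*) };
}

fn main() {
    println!("{}", sum!(1 2 3)); // 6
}
```

The marker itself is arbitrary; @ is a common choice simply because a token sequence starting with it is unlikely to be written by a user of the macro.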

Now all tokens are processed by the corresponding branches, and we just need to handle the final case when the stack contains a single item and no more tokens are left:

macro_rules! rpn {

// ...

([ $result:expr ]) => {

$result

};

}

At this point, if you invoke this macro with an empty stack and an RPN expression, it will already produce a correct result:

println!("{}", rpn!([] 2 3 + 4 *)); // 20

However, our stack is an implementation detail and we really wouldn't want every consumer to pass an empty stack in, so let's add another catch-all branch at the end that would serve as an entry point and add [] automatically:

macro_rules! rpn {

// ...

($($tokens:tt)*) => {

rpn!([] $($tokens)*)

};

}

println!("{}", rpn!(2 3 + 4 *)); // 20

Our macro even works for more complex expressions, like the one from the Wikipedia page about RPN!

println!("{}", rpn!(15 7 1 1 + - / 3 * 2 1 1 + + -)); // 5

Error handling

Now everything seems to work smoothly for correct RPN expressions, but for a macro to be production-ready we need to be sure that it can handle invalid input as well, with a reasonable error message.

First, let's try to insert another number in the middle and see what happens:

println!("{}", rpn!(2 3 7 + 4 *));

Output:

error[E0277]: the trait bound `[{integer}; 2]: std::fmt::Display` is not satisfied

--> src/main.rs:36:20

|

36 | println!("{}", rpn!(2 3 7 + 4 *));

| ^^^^^^^^^^^^^^^^^ `[{integer}; 2]` cannot be formatted with the default formatter; try using `:?` instead if you are using a format string

|

= help: the trait `std::fmt::Display` is not implemented for `[{integer}; 2]`

= note: required by `std::fmt::Display::fmt`

Okay, that definitely doesn't look helpful as it doesn't provide any information relevant to the actual mistake in the expression.

In order to figure out what happened, we will need to debug our macro. For that, we'll use the trace_macros feature (and, like for any other unstable compiler feature, you'll need a nightly version of Rust). We don't want to trace the println! call, so we'll move our RPN calculation into a variable:

#![feature(trace_macros)]

macro_rules! rpn { /* ... */ }

fn main() {

trace_macros!(true);

let e = rpn!(2 3 7 + 4 *);

trace_macros!(false);

println!("{}", e);

}

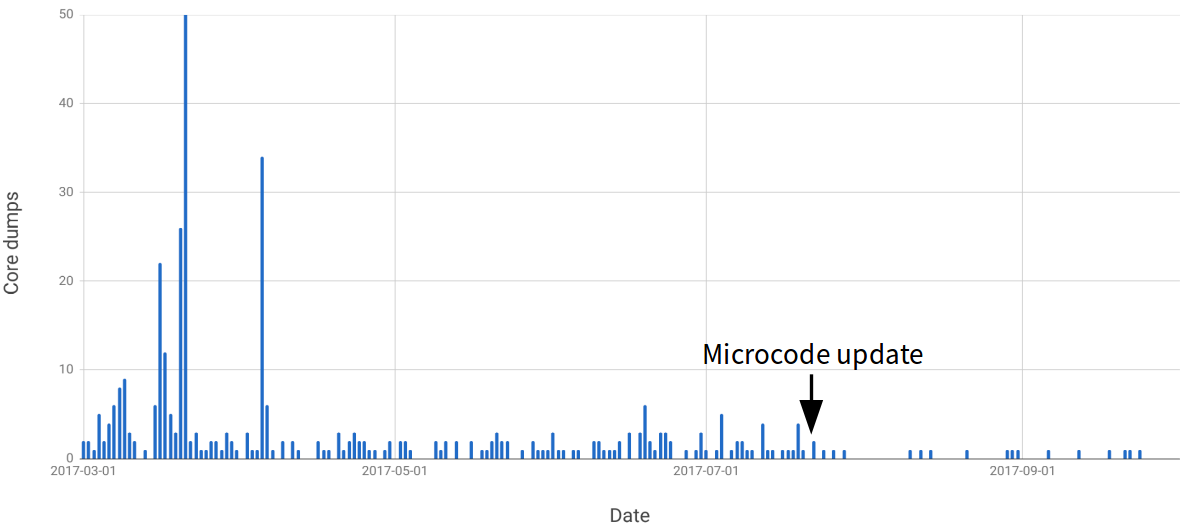

In the output we'll now see how our macro is being recursively evaluated step by step:

note: trace_macro

--> src/main.rs:39:13

|

39 | let e = rpn!(2 3 7 + 4 *);

| ^^^^^^^^^^^^^^^^^

|

= note: expanding `rpn! { 2 3 7 + 4 * }`

= note: to `rpn ! ( [ ] 2 3 7 + 4 * )`

= note: expanding `rpn! { [ ] 2 3 7 + 4 * }`

= note: to `rpn ! ( [ 2 ] 3 7 + 4 * )`

= note: expanding `rpn! { [ 2 ] 3 7 + 4 * }`

= note: to `rpn ! ( [ 3 , 2 ] 7 + 4 * )`

= note: expanding `rpn! { [ 3 , 2 ] 7 + 4 * }`

= note: to `rpn ! ( [ 7 , 3 , 2 ] + 4 * )`

= note: expanding `rpn! { [ 7 , 3 , 2 ] + 4 * }`

= note: to `rpn ! ( @ op [ 7 , 3 , 2 ] + 4 * )`

= note: expanding `rpn! { @ op [ 7 , 3 , 2 ] + 4 * }`

= note: to `rpn ! ( [ 3 + 7 , 2 ] 4 * )`

= note: expanding `rpn! { [ 3 + 7 , 2 ] 4 * }`

= note: to `rpn ! ( [ 4 , 3 + 7 , 2 ] * )`

= note: expanding `rpn! { [ 4 , 3 + 7 , 2 ] * }`

= note: to `rpn ! ( @ op [ 4 , 3 + 7 , 2 ] * )`

= note: expanding `rpn! { @ op [ 4 , 3 + 7 , 2 ] * }`

= note: to `rpn ! ( [ 3 + 7 * 4 , 2 ] )`

= note: expanding `rpn! { [ 3 + 7 * 4 , 2 ] }`

= note: to `rpn ! ( [ ] [ 3 + 7 * 4 , 2 ] )`

= note: expanding `rpn! { [ ] [ 3 + 7 * 4 , 2 ] }`

= note: to `rpn ! ( [ [ 3 + 7 * 4 , 2 ] ] )`

= note: expanding `rpn! { [ [ 3 + 7 * 4 , 2 ] ] }`

= note: to `[(3 + 7) * 4, 2]`

If we carefully look through the trace, we'll notice that the problem originates in these steps:

= note: expanding `rpn! { [ 3 + 7 * 4 , 2 ] }`

= note: to `rpn ! ( [ ] [ 3 + 7 * 4 , 2 ] )`

Since [ 3 + 7 * 4 , 2 ] was not matched by the ([$result:expr]) => ... branch as a final expression, it was caught by our final catch-all ($($tokens:tt)*) => ... branch instead and prepended with an empty stack []. Then the original [ 3 + 7 * 4 , 2 ] was matched by the generic $num:tt and pushed onto the stack as a single final value.
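You may have noticed that the trace prints 3 + 7 * 4 without parentheses, while the final expansion shows (3 + 7) * 4. A fragment captured as expr is substituted as a single expression, so its grouping is preserved even though the trace shows it as flat tokens. A minimal sketch with a hypothetical times_four! macro shows the effect:

```rust
// Hypothetical helper for illustration: multiplies a captured expression by 4.
macro_rules! times_four {
    // $e is substituted as one expression, not as a loose run of tokens.
    ($e:expr) => { $e * 4 };
}

fn main() {
    // Behaves like (2 + 3) * 4, not 2 + 3 * 4.
    println!("{}", times_four!(2 + 3)); // 20
}
```

This is also why our stack can safely hold intermediate infix expressions without adding explicit parentheses.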

In order to prevent this from happening, let's insert another branch between these last two that would match any stack.

It would be hit only when we ran out of tokens but the stack didn't have exactly one final value, so we can treat it as a compile error and produce a more helpful error message using the built-in compile_error! macro.

Note that we can't use format! in this context since it uses runtime APIs to format a string, and instead we'll have to limit ourselves to built-in concat! and stringify! macros to format a message:


macro_rules! rpn {

// ...

([ $result:expr ]) => {

$result

};

([ $($stack:expr),* ]) => {

compile_error!(concat!(

"Could not find final value for the expression, perhaps you missed an operator? Final stack: ",

stringify!([ $($stack),* ])

))

};

($($tokens:tt)*) => {

rpn!([] $($tokens)*)

};

}

The error message is now more meaningful and contains at least some details about the current state of evaluation:

error: Could not find final value for the expression, perhaps you missed an operator? Final stack: [ (3 + 7) * 4 , 2 ]

--> src/main.rs:31:9

|

31 | compile_error!(concat!("Could not find final value for the expression, perhaps you missed an operator? Final stack: ", stringify!([$($stack),*])))

| ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

...

40 | println!("{}", rpn!(2 3 7 + 4 *));

| ----------------- in this macro invocation
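To see that concat! and stringify! really produce a plain string at compile time, here is a small sketch with a hypothetical describe_stack! macro that builds the same kind of message but returns it instead of raising an error. The exact spacing inside the stringified part is up to the compiler, so it's best not to rely on it.

```rust
// Hypothetical helper for illustration: builds a message from a bracketed stack.
macro_rules! describe_stack {
    ([ $($stack:expr),* ]) => {
        // Both built-in macros are expanded at compile time into one string literal.
        concat!("Final stack: ", stringify!([ $($stack),* ]))
    };
}

fn main() {
    // The result is a &'static str; no runtime formatting is involved.
    let message: &'static str = describe_stack!([(3 + 7) * 4, 2]);
    println!("{}", message);
}
```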

But what if, instead, we miss some number?


println!("{}", rpn!(2 3 + *));

Unfortunately, this one is still not too helpful:

error: expected expression, found `@`

--> src/main.rs:15:14

|

15 | rpn!(@op $stack * $($rest)*)

| ^

...

40 | println!("{}", rpn!(2 3 + *));

| ------------- in this macro invocation

If you try to use trace_macros, even it won't expand the stack here for some reason, but, luckily, it's relatively clear what's going on - @op has very specific conditions as to what should be matched (it expects at least two values on the stack), and, when that branch can't match, @ gets matched by the same way-too-greedy $num:tt and pushed onto the stack.

To avoid this, again, we'll add another branch to match anything starting with @op that wasn't matched already, and produce a compile error:

macro_rules! rpn {

(@op [ $b:expr, $a:expr $(, $stack:expr)* ] $op:tt $($rest:tt)*) => {

rpn!([ $a $op $b $(, $stack)* ] $($rest)*)

};

(@op $stack:tt $op:tt $($rest:tt)*) => {

compile_error!(concat!(

"Could not apply operator `",

stringify!($op),

"` to the current stack: ",

stringify!($stack)

))

};

// ...

}

Let's try again:

error: Could not apply operator `*` to the current stack: [ 2 + 3 ]

--> src/main.rs:9:9

|

9 | compile_error!(concat!("Could not apply operator ", stringify!($op), " to current stack: ", stringify!($stack)))

| ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^

...

46 | println!("{}", rpn!(2 3 + *));

| ------------- in this macro invocation
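For reference, here are all the branches from this tutorial assembled into one definition, in the order they need to appear. The only additions are the comments and the TWENTY constant, which is there just to show that the expansion is an ordinary infix expression and can be used where a constant expression is expected.

```rust
macro_rules! rpn {
    // Helper: apply an operator to the two topmost stack values.
    (@op [ $b:expr, $a:expr $(, $stack:expr)* ] $op:tt $($rest:tt)*) => {
        rpn!([ $a $op $b $(, $stack)* ] $($rest)*)
    };
    // Helper fallback: fewer than two values on the stack.
    (@op $stack:tt $op:tt $($rest:tt)*) => {
        compile_error!(concat!(
            "Could not apply operator `",
            stringify!($op),
            "` to the current stack: ",
            stringify!($stack)
        ))
    };
    // Operator branches must come before the generic operand branch.
    ($stack:tt + $($rest:tt)*) => { rpn!(@op $stack + $($rest)*) };
    ($stack:tt - $($rest:tt)*) => { rpn!(@op $stack - $($rest)*) };
    ($stack:tt * $($rest:tt)*) => { rpn!(@op $stack * $($rest)*) };
    ($stack:tt / $($rest:tt)*) => { rpn!(@op $stack / $($rest)*) };
    // Operand: push it onto the front of the stack.
    ([ $($stack:expr),* ] $num:tt $($rest:tt)*) => {
        rpn!([ $num $(, $stack)* ] $($rest)*)
    };
    // No tokens left and exactly one value on the stack: that's the result.
    ([ $result:expr ]) => { $result };
    // No tokens left but the stack doesn't hold exactly one value.
    ([ $($stack:expr),* ]) => {
        compile_error!(concat!(
            "Could not find final value for the expression, perhaps you missed an operator? Final stack: ",
            stringify!([ $($stack),* ])
        ))
    };
    // Entry point: start with an empty stack.
    ($($tokens:tt)*) => { rpn!([] $($tokens)*) };
}

// The macro expands to (2 + 3) * 4, which is a valid constant expression.
const TWENTY: i32 = rpn!(2 3 + 4 *);

fn main() {
    println!("{}", TWENTY); // 20
    println!("{}", rpn!(15 7 1 1 + - / 3 * 2 1 1 + + -)); // 5
}
```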

Much better! Now our macro can evaluate any RPN expression at compile-time, and gracefully handles the most common mistakes, so let's call it a day and say it's production-ready :)

There are many more small improvements we could add, but I'd like to leave them outside this demonstration tutorial.

Feel free to let me know on Twitter if this has been useful and/or what topics you'd like to see covered better!

CC BY-SA 2.0 image by Michele M. F.
